diff --git a/.github/workflows/test.yml b/.github/workflows/test.yml

new file mode 100644

index 00000000..62fc70a8

--- /dev/null

+++ b/.github/workflows/test.yml

@@ -0,0 +1,86 @@

+name: Run Tests

+

+on:

+ push:

+ branches: [ main ]

+ pull_request:

+ branches: [ main ]

+

+jobs:

+ test:

+ runs-on: ubuntu-latest

+ strategy:

+ matrix:

+ python-version: ['3.12']

+

+ steps:

+ - uses: actions/checkout@v4

+

+ - name: Set up Python ${{ matrix.python-version }}

+ uses: actions/setup-python@v4

+ with:

+ python-version: ${{ matrix.python-version }}

+

+ - name: Cache pip packages

+ uses: actions/cache@v3

+ with:

+ path: ~/.cache/pip

+ key: ${{ runner.os }}-pip-${{ hashFiles('**/requirements.txt') }}

+ restore-keys: |

+ ${{ runner.os }}-pip-

+

+ - name: Install dependencies

+ run: |

+ python -m pip install --upgrade pip

+ pip install -r requirements.txt

+ pip install -r tests/requirements.txt

+

+ - name: Run unit tests

+ run: |

+ # Run quick CI tests

+ python tests/test_ci_quick.py

+

+ # Run plugin tests with pytest if available

+ python -m pytest tests/test_plugins.py -v --tb=short || python tests/test_plugins.py

+

+ # Run approach tests

+ python tests/test_approaches.py

+

+ integration-test:

+ runs-on: ubuntu-latest

+ needs: test

+ if: github.event_name == 'pull_request' && github.event.pull_request.head.repo.full_name == github.repository

+ # Only run integration tests on PRs from the same repository (not forks)

+ # This ensures secrets are available

+

+ steps:

+ - uses: actions/checkout@v4

+

+ - name: Set up Python

+ uses: actions/setup-python@v4

+ with:

+ python-version: '3.12'

+

+ - name: Install dependencies

+ run: |

+ python -m pip install --upgrade pip

+ pip install -r requirements.txt

+

+ - name: Run integration test with OpenAI

+ if: env.OPENAI_API_KEY != ''

+ run: |

+ # Start OptILLM server

+ python optillm.py &

+ SERVER_PID=$!

+

+ # Wait for server

+ sleep 5

+

+ # Run simple integration test

+ python tests/test.py --approaches none --single-test "Simple Math Problem" --base-url http://localhost:8000/v1 --model gpt-4o-mini || true

+

+ # Stop server

+ kill $SERVER_PID || true

+ env:

+ OPENAI_API_KEY: ${{ secrets.OPENAI_API_KEY }}

+ continue-on-error: true

\ No newline at end of file

diff --git a/.gitignore b/.gitignore

index 70e8202d..01215d5b 100644

--- a/.gitignore

+++ b/.gitignore

@@ -170,3 +170,4 @@ cython_debug/

scripts/results/

results/

+test_results.json

diff --git a/README.md b/README.md

index 5dd4069c..3f05f324 100644

--- a/README.md

+++ b/README.md

@@ -377,6 +377,7 @@ Check this log file for connection issues, tool execution errors, and other diag

| Read URLs | `readurls` | Reads all URLs found in the request, fetches the content at the URL and adds it to the context |

| Execute Code | `executecode` | Enables use of code interpreter to execute python code in requests and LLM generated responses |

| JSON | `json` | Enables structured outputs using the outlines library, supports pydantic types and JSON schema |

+| GenSelect | `genselect` | Generative Solution Selection - generates multiple candidates and selects the best based on quality criteria |

## Available parameters

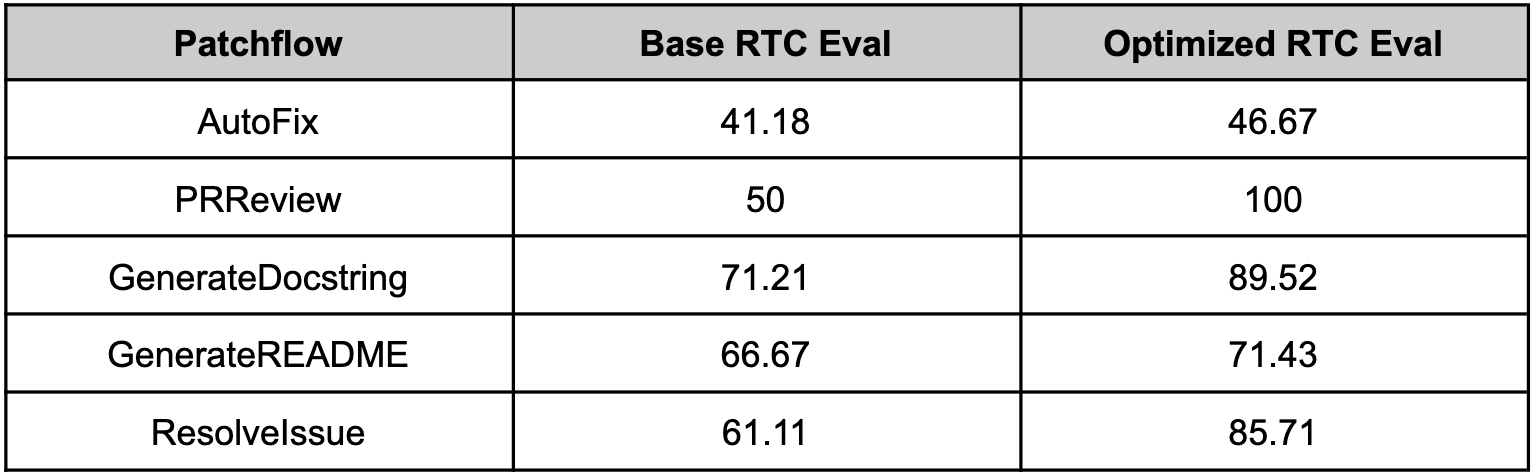

@@ -564,6 +565,46 @@ called patchflows. We saw huge performance gains across all the supported patchf

+## Testing

+

+OptILLM includes a comprehensive test suite to ensure reliability and compatibility.

+

+### Running Tests

+

+The main test suite can be run from the project root:

+```bash

+# Test all approaches with default test cases

+python tests/test.py

+

+# Test specific approaches

+python tests/test.py --approaches moa bon mcts

+

+# Run a single test

+python tests/test.py --single-test "Simple Math Problem"

+```

+

+### Unit and Integration Tests

+

+Additional tests are available in the `tests/` directory:

+```bash

+# Run all tests (requires pytest)

+./tests/run_tests.sh

+

+# Run specific test modules

+pytest tests/test_plugins.py -v

+pytest tests/test_api_compatibility.py -v

+```

+

+### CI/CD

+

+All tests are automatically run on pull requests via GitHub Actions. The workflow tests:

+- Multiple Python versions (3.10, 3.11, 3.12)

+- Unit tests for plugins and core functionality

+- API compatibility tests

+- Integration tests with various approaches

+

+See `tests/README.md` for more details on the test structure and how to write new tests.

+

## References

- [Eliciting Fine-Tuned Transformer Capabilities via Inference-Time Techniques](https://arxiv.org/abs/2506.08060)

- [AutoThink: efficient inference for reasoning LLMs](https://dx.doi.org/10.2139/ssrn.5253327) - [Implementation](optillm/autothink)

@@ -587,6 +628,7 @@ called patchflows. We saw huge performance gains across all the supported patchf

- [Unsupervised Evaluation of Code LLMs with Round-Trip Correctness](https://arxiv.org/abs/2402.08699) - [Inspired the implementation of rto](optillm/rto.py)

- [Patched MOA: optimizing inference for diverse software development tasks](https://arxiv.org/abs/2407.18521) - [Implementation](optillm/moa.py)

- [Patched RTC: evaluating LLMs for diverse software development tasks](https://arxiv.org/abs/2407.16557) - [Implementation](ptillm/rto.py)

+- [AIMO-2 Winning Solution: Building State-of-the-Art Mathematical Reasoning Models with OpenMathReasoning dataset](https://arxiv.org/abs/2504.16891) - [Implementation](optillm/plugins/genselect_plugin.py)

## Citation

diff --git a/optillm.py b/optillm.py

index ef421160..32d28c4b 100644

--- a/optillm.py

+++ b/optillm.py

@@ -302,9 +302,9 @@ def execute_single_approach(approach, system_prompt, initial_query, client, mode

if hasattr(request, 'json'):

data = request.get_json()

messages = data.get('messages', [])

- # Copy all parameters except 'stream', 'model' , 'n' and 'messages'

+ # Copy all parameters except 'stream', 'model' and 'messages'

kwargs = {k: v for k, v in data.items()

- if k not in ['model', 'messages', 'stream', 'n', 'optillm_approach']}

+ if k not in ['model', 'messages', 'stream', 'optillm_approach']}

response = none_approach(original_messages=messages, client=client, model=model, **kwargs)

# For none approach, we return the response and a token count of 0

# since the full token count is already in the response

@@ -641,17 +641,8 @@ def proxy():

contains_none = any(approach == 'none' for approach in approaches)

if operation == 'SINGLE' and approaches[0] == 'none':

- # For none approach with n>1, make n separate calls

- if n > 1:

- responses = []

- completion_tokens = 0

- for _ in range(n):

- result, tokens = execute_single_approach(approaches[0], system_prompt, initial_query, client, model, request_config)

- responses.append(result)

- completion_tokens += tokens

- result = responses

- else:

- result, completion_tokens = execute_single_approach(approaches[0], system_prompt, initial_query, client, model, request_config)

+ # Pass through the request including the n parameter

+ result, completion_tokens = execute_single_approach(approaches[0], system_prompt, initial_query, client, model, request_config)

logger.debug(f'Direct proxy response: {result}')

diff --git a/optillm/plugins/genselect_plugin.py b/optillm/plugins/genselect_plugin.py

new file mode 100644

index 00000000..f78aac3d

--- /dev/null

+++ b/optillm/plugins/genselect_plugin.py

@@ -0,0 +1,269 @@

+"""

+GenSelect Plugin for OptILLM

+

+This plugin implements the Generative Solution Selection (GenSelect) approach from

+the paper "AIMO-2 Winning Solution: Building State-of-the-Art Mathematical Reasoning

+Models with OpenMathReasoning dataset" (arXiv:2504.16891).

+

+GenSelect generates multiple candidate solutions and uses an LLM to compare and

+select the best one based on quality criteria. Unlike majority voting which counts

+answer frequencies, GenSelect evaluates the entire response quality.

+"""

+

+import logging

+from typing import Tuple, Dict, Any, List, Optional

+import json

+

+logger = logging.getLogger(__name__)

+

+# Plugin identifier

+SLUG = "genselect"

+

+# Default configuration

+DEFAULT_NUM_CANDIDATES = 4

+DEFAULT_TEMPERATURE = 0.7

+DEFAULT_COMPARISON_TEMPERATURE = 0.3

+DEFAULT_COMPARISON_MODE = "batch" # "batch" or "tournament"

+DEFAULT_INCLUDE_REASONING = False

+

+def create_comparison_prompt(candidates: List[str], query: str, comparison_mode: str = "batch") -> str:

+ """

+ Create a prompt for comparing candidate solutions.

+

+ Args:

+ candidates: List of candidate responses

+ query: The original user query

+ comparison_mode: "batch" for all at once, "tournament" for pairwise

+

+ Returns:

+ The comparison prompt

+ """

+ if comparison_mode == "batch":

+ prompt = f"""You are an expert evaluator tasked with selecting the best response to the following query:

+

+Query: {query}

+

+I will provide you with {len(candidates)} different candidate responses. Please analyze each one carefully and select the best response based on the following criteria:

+

+1. **Correctness and Accuracy**: Is the response factually correct and accurate?

+2. **Completeness**: Does it fully address all aspects of the query?

+3. **Clarity**: Is the explanation clear and easy to understand?

+4. **Logical Coherence**: Is the reasoning sound and well-structured?

+5. **Practical Value**: Does it provide useful, actionable information?

+

+For coding problems, also consider:

+- Code correctness and efficiency

+- Best practices and style

+- Error handling

+

+Here are the candidate responses:

+

+"""

+ for i, candidate in enumerate(candidates, 1):

+ prompt += f"=== Candidate {i} ===\n{candidate}\n\n"

+

+ prompt += """Please analyze all candidates and provide:

+1. A brief comparison highlighting the strengths and weaknesses of each candidate

+2. Your selection of the best candidate (specify the number)

+3. A clear explanation of why you selected that candidate

+

+Format your response as:

+COMPARISON:

+[Your comparison analysis]

+

+BEST CANDIDATE: [number]

+

+REASONING:

+[Your explanation for the selection]"""

+

+ else: # tournament mode - for future enhancement

+ # This would implement pairwise comparisons

+ # For now, we'll use batch mode as default

+ return create_comparison_prompt(candidates, query, "batch")

+

+ return prompt

+

+def parse_selection_response(response: str, num_candidates: int) -> Tuple[int, str]:

+ """

+ Parse the selection response to extract the chosen candidate number and reasoning.

+

+ Args:

+ response: The LLM's comparison response

+ num_candidates: Total number of candidates

+

+ Returns:

+ Tuple of (selected_index, reasoning)

+ """

+ import re

+

+ # Look for "BEST CANDIDATE: X" pattern

+ match = re.search(r'BEST CANDIDATE:\s*(\d+)', response, re.IGNORECASE)

+ if match:

+ candidate_num = int(match.group(1))

+ # Convert to 0-based index

+ selected_index = candidate_num - 1

+

+ # Validate the selection

+ if 0 <= selected_index < num_candidates:

+ # Extract reasoning if available

+ reasoning_match = re.search(r'REASONING:\s*(.+)', response, re.IGNORECASE | re.DOTALL)

+ reasoning = reasoning_match.group(1).strip() if reasoning_match else "No explicit reasoning provided"

+

+ logger.info(f"Selected candidate {candidate_num} based on comparison")

+ return selected_index, reasoning

+

+ # Fallback: Look for other patterns like "Candidate X is the best"

+ patterns = [

+ r'[Cc]andidate\s+(\d+)\s+is\s+(?:the\s+)?best',

+ r'[Ii]\s+(?:would\s+)?select\s+[Cc]andidate\s+(\d+)',

+ r'[Tt]he\s+best\s+(?:response|candidate)\s+is\s+(?:number\s+)?(\d+)',

+ ]

+

+ for pattern in patterns:

+ match = re.search(pattern, response)

+ if match:

+ candidate_num = int(match.group(1))

+ selected_index = candidate_num - 1

+ if 0 <= selected_index < num_candidates:

+ logger.info(f"Selected candidate {candidate_num} using fallback pattern")

+ return selected_index, "Selection extracted from response pattern"

+

+ # If no clear selection found, log warning and return first candidate

+ logger.warning("Could not parse selection from comparison response, defaulting to first candidate")

+ return 0, "Failed to parse selection, defaulted to first candidate"

+

+def run(

+ system_prompt: str,

+ initial_query: str,

+ client,

+ model: str,

+ request_config: Dict[str, Any] = None

+) -> Tuple[str, int]:

+ """

+ Main entry point for the GenSelect plugin.

+

+ Generates multiple candidate solutions and uses LLM comparison to select the best one.

+

+ Args:

+ system_prompt: System prompt for the model

+ initial_query: User's query

+ client: OpenAI-compatible client instance

+ model: Model identifier

+ request_config: Additional configuration parameters

+

+ Returns:

+ Tuple of (response_text, completion_tokens_used)

+ """

+ logger.info("Starting GenSelect process")

+

+ # Extract configuration

+ config = request_config or {}

+ num_candidates = config.get('num_candidates', DEFAULT_NUM_CANDIDATES)

+ temperature = config.get('temperature', DEFAULT_TEMPERATURE)

+ comparison_temperature = config.get('comparison_temperature', DEFAULT_COMPARISON_TEMPERATURE)

+ comparison_mode = config.get('comparison_mode', DEFAULT_COMPARISON_MODE)

+ include_reasoning = config.get('include_reasoning', DEFAULT_INCLUDE_REASONING)

+ max_tokens = config.get('max_tokens', 4096)

+

+ # Validate num_candidates is at least 2

+ num_candidates = max(2, num_candidates)

+

+ logger.info(f"Generating {num_candidates} candidates with temperature={temperature}")

+

+ # Prepare messages for candidate generation

+ messages = [

+ {"role": "system", "content": system_prompt},

+ {"role": "user", "content": initial_query}

+ ]

+

+ candidates = []

+ total_tokens = 0

+

+ try:

+ # Try to generate candidates using n parameter for efficiency

+ response = client.chat.completions.create(

+ model=model,

+ messages=messages,

+ n=num_candidates,

+ temperature=temperature,

+ max_tokens=max_tokens

+ )

+

+ candidates = [choice.message.content for choice in response.choices]

+ total_tokens += response.usage.completion_tokens

+

+ logger.info(f"Generated {len(candidates)} candidates using n parameter. Tokens: {total_tokens}")

+

+ except Exception as e:

+ logger.warning(f"n parameter not supported: {str(e)}")

+ logger.info("Falling back to sequential generation")

+

+ # Fallback: Generate candidates one by one

+ for i in range(num_candidates):

+ try:

+ response = client.chat.completions.create(

+ model=model,

+ messages=messages,

+ temperature=temperature,

+ max_tokens=max_tokens

+ )

+ candidates.append(response.choices[0].message.content)

+ total_tokens += response.usage.completion_tokens

+ logger.debug(f"Generated candidate {i+1}/{num_candidates}")

+

+ except Exception as gen_error:

+ logger.error(f"Error generating candidate {i+1}: {str(gen_error)}")

+ continue

+

+ if len(candidates) < 2:

+ logger.error(f"Insufficient candidates generated ({len(candidates)})")

+ if candidates:

+ return candidates[0], total_tokens

+ return "Error: Could not generate sufficient candidates for selection", total_tokens

+

+ # Create comparison prompt

+ comparison_prompt = create_comparison_prompt(candidates, initial_query, comparison_mode)

+

+ # Get LLM to compare and select

+ logger.info("Comparing candidates for selection")

+

+ try:

+ comparison_messages = [

+ {"role": "system", "content": "You are an expert evaluator skilled at comparing and selecting high-quality responses."},

+ {"role": "user", "content": comparison_prompt}

+ ]

+

+ comparison_response = client.chat.completions.create(

+ model=model,

+ messages=comparison_messages,

+ temperature=comparison_temperature,

+ max_tokens=2048 # Comparison doesn't need as many tokens

+ )

+

+ selection_response = comparison_response.choices[0].message.content

+ total_tokens += comparison_response.usage.completion_tokens

+

+ # Parse the selection

+ selected_index, reasoning = parse_selection_response(selection_response, len(candidates))

+

+ # Get the selected candidate

+ selected_candidate = candidates[selected_index]

+

+ logger.info(f"GenSelect Summary:")

+ logger.info(f" - Generated {len(candidates)} candidates")

+ logger.info(f" - Selected candidate {selected_index + 1}")

+ logger.info(f" - Total tokens used: {total_tokens}")

+

+ # Optionally include reasoning in the response

+ if include_reasoning:

+ final_response = f"{selected_candidate}\n\n---\n**GenSelect Reasoning**: {reasoning}"

+ else:

+ final_response = selected_candidate

+

+ return final_response, total_tokens

+

+ except Exception as e:

+ logger.error(f"Error during comparison: {str(e)}")

+ # Fallback to first candidate

+ logger.warning("Falling back to first candidate due to comparison error")

+ return candidates[0], total_tokens

\ No newline at end of file

diff --git a/optillm/plugins/majority_voting_plugin.py b/optillm/plugins/majority_voting_plugin.py

index 311072b7..b7ee484b 100644

--- a/optillm/plugins/majority_voting_plugin.py

+++ b/optillm/plugins/majority_voting_plugin.py

@@ -1,158 +1,77 @@

"""

Majority Voting Plugin for OptILLM

-This plugin implements a majority voting approach where k candidate solutions

-are generated and the most frequent answer is selected. This is particularly

-effective for problems with discrete answers (math, coding, multiple choice).

-

-The plugin uses the OpenAI API's n parameter to generate multiple responses

-efficiently in a single API call.

+Generic implementation that generates multiple candidates and selects

+the most common response through simple voting.

"""

import re

import logging

from typing import Tuple, Dict, Any, List, Optional

from collections import Counter

-import json

logger = logging.getLogger(__name__)

# Plugin identifier

SLUG = "majority_voting"

-# Default number of candidates to generate

-DEFAULT_K = 6

+# Default configuration

+DEFAULT_K = 8

+DEFAULT_TEMPERATURE = 0.6 # Unified temperature for consistency

-# Default temperature for candidate generation

-DEFAULT_TEMPERATURE = 0.6

-def extract_answer(text: str) -> Optional[str]:

+def normalize_response(response: str) -> str:

"""

- Extract the answer from a response text.

-

- This function looks for common answer patterns in the response:

- 1. Text after "Answer:" or "Final Answer:"

- 2. Text within \\boxed{} (LaTeX format)

- 3. Numbers at the end of the response

- 4. The last line if it's short (likely the answer)

-

- Args:

- text: The response text to extract answer from

-

- Returns:

- The extracted answer or None if no clear answer found

+ Basic normalization for comparing responses.

+ Removes extra whitespace, punctuation at ends, and lowercases.

"""

- # Remove any trailing whitespace

- text = text.strip()

-

- # Pattern 1: Look for LaTeX boxed format first (handle both \boxed and \\boxed)

- boxed_match = re.search(r'\\{1,2}boxed\{([^}]+)\}', text)

- if boxed_match:

- answer = boxed_match.group(1).strip()

- logger.debug(f"Extracted boxed answer: {answer}")

- return answer

-

- # Pattern 2: Look for "Answer:" or "Final Answer:" patterns

- answer_patterns = [

- r'(?:final\s+)?answer\s*[:=]\s*(.+?)(?:\n|$)',

- r'(?:the\s+)?(?:final\s+)?answer\s+is\s*[:=]?\s*(.+?)(?:\n|$)',

- r'(?:therefore|thus|so)\s*,?\s*(.+?)(?:\n|$)'

- ]

-

- for pattern in answer_patterns:

- match = re.search(pattern, text, re.IGNORECASE)

- if match:

- answer = match.group(1).strip()

- # Clean up the answer

- answer = answer.rstrip('.,;')

- if answer:

- logger.debug(f"Extracted answer using pattern: {answer}")

- return answer

+ if not response:

+ return ""

- # Pattern 3: Look for standalone numbers (useful for math problems)

- # Check the last few lines for a number

- lines = text.split('\n')

- for line in reversed(lines[-3:]): # Check last 3 lines

- line = line.strip()

- # Match numbers (including decimals, fractions, negative numbers)

- number_match = re.match(r'^-?\d+\.?\d*$|^-?\d+/\d+$', line)

- if number_match:

- logger.debug(f"Extracted number answer: {line}")

- return line

+ # Remove thinking blocks if present

+ response = re.sub(r'.*?', '', response, flags=re.DOTALL)

- # Pattern 4: For multiple choice, look for single letter answers

- # Check this before the generic last line check

- mc_patterns = [

- r'(?:the\s+)?(?:correct\s+)?(?:answer|option)\s+is\s+([A-E])(?:\b|$)',

- r'(?:choose|select|pick)\s+(?:option\s+)?([A-E])(?:\b|$)',

- r'\b([A-E])\s*\)\s*[A-Za-z]+.*is\s+(?:the\s+)?(?:correct|right)',

- r'^([A-E])$', # Just a letter on its own line

- ]

+ # Basic normalization

+ response = response.strip()

+ response = response.lower()

- for pattern in mc_patterns:

- mc_match = re.search(pattern, text, re.IGNORECASE | re.MULTILINE)

- if mc_match:

- answer = mc_match.group(1).upper()

- logger.debug(f"Extracted multiple choice answer: {answer}")

- return answer

+ # Remove trailing punctuation

+ response = response.rstrip('.,;:!?')

- # Pattern 5: If the last line is short (< 50 chars), it might be the answer

- if lines:

- last_line = lines[-1].strip()

- if last_line and len(last_line) < 50 and not last_line.endswith(':'):

- logger.debug(f"Using last line as answer: {last_line}")

- return last_line

+ # Normalize whitespace

+ response = ' '.join(response.split())

- logger.warning("Could not extract a clear answer from the response")

- return None

+ return response

+

-def normalize_answer(answer: str) -> str:

+def extract_final_answer(response: str) -> str:

"""

- Normalize an answer for comparison.

-

- This helps ensure that equivalent answers are treated as the same:

- - Converts to lowercase

- - Removes extra whitespace

- - Removes quotes

- - Normalizes number formats

-

- Args:

- answer: The answer to normalize

-

- Returns:

- The normalized answer

+ Try to extract just the final answer from a response.

+ This is generic and looks for common patterns.

"""

- # Convert to lowercase

- answer = answer.lower().strip()

-

- # Remove quotes

- answer = answer.strip('"\'')

-

- # Normalize whitespace

- answer = ' '.join(answer.split())

-

- # Try to normalize numbers

- try:

- # Check if it's a float

- if '.' in answer:

- num = float(answer)

- # Format to remove trailing zeros

- answer = f"{num:g}"

- else:

- # Try integer

- num = int(answer)

- answer = str(num)

- except ValueError:

- # Not a number, keep as is

- pass

+ if not response:

+ return response

+

+ # Remove thinking blocks

+ response = re.sub(r'.*?', '', response, flags=re.DOTALL).strip()

+

+ # Look for common answer patterns

+ patterns = [

+ r'(?:final answer|answer):\s*(.+?)(?:\n|$)',

+ r'(?:the answer is|answer is)\s*(.+?)(?:\n|$)',

+ r'###\s*(.+?)(?:\n|$)', # Common in math problems

+ r'^([A-E])\b', # Single letter at start

+ r'\b([A-E])\b\s*$', # Single letter at end

+ ]

- # Handle yes/no variations

- if answer in ['yes', 'yeah', 'yep', 'true', 'correct']:

- answer = 'yes'

- elif answer in ['no', 'nope', 'false', 'incorrect']:

- answer = 'no'

+ for pattern in patterns:

+ match = re.search(pattern, response, re.IGNORECASE | re.MULTILINE)

+ if match:

+ return match.group(1).strip()

- return answer

+ # If no pattern found, return the whole response

+ return response

+

def run(

system_prompt: str,

@@ -162,34 +81,14 @@ def run(

request_config: Dict[str, Any] = None

) -> Tuple[str, int]:

"""

- Main entry point for the majority voting plugin.

-

- Generates k candidate solutions and returns the most frequent answer.

-

- Args:

- system_prompt: System prompt for the model

- initial_query: User's query

- client: OpenAI-compatible client instance

- model: Model identifier

- request_config: Additional configuration parameters

-

- Returns:

- Tuple of (response_text, completion_tokens_used)

+ Generic majority voting implementation.

"""

logger.info("Starting majority voting process")

- # Extract parameters from request_config

- k = DEFAULT_K

- temperature = DEFAULT_TEMPERATURE

-

- if request_config:

- k = request_config.get('k', DEFAULT_K)

- # Allow overriding temperature if needed

- temperature = request_config.get('temperature', DEFAULT_TEMPERATURE)

- # Respect max_tokens if provided

- max_tokens = request_config.get('max_tokens', 4096)

- else:

- max_tokens = 4096

+ # Extract parameters

+ k = request_config.get('k', DEFAULT_K) if request_config else DEFAULT_K

+ temperature = request_config.get('temperature', DEFAULT_TEMPERATURE) if request_config else DEFAULT_TEMPERATURE

+ max_tokens = request_config.get('max_tokens', 4096) if request_config else 4096

logger.info(f"Generating {k} candidates with temperature={temperature}")

@@ -199,8 +98,12 @@ def run(

{"role": "user", "content": initial_query}

]

+ # Generate candidates

+ candidates = []

+ total_tokens = 0

+

try:

- # Generate k candidates in a single API call using n parameter

+ # Try parallel generation first

response = client.chat.completions.create(

model=model,

messages=messages,

@@ -209,20 +112,12 @@ def run(

max_tokens=max_tokens

)

- # Extract all candidate responses

candidates = [choice.message.content for choice in response.choices]

total_tokens = response.usage.completion_tokens

- logger.info(f"Generated {len(candidates)} candidates using n parameter. Tokens used: {total_tokens}")

-

except Exception as e:

- logger.warning(f"n parameter not supported by provider: {str(e)}")

- logger.info(f"Falling back to generating {k} candidates one by one")

-

- # Fallback: Generate candidates one by one in a loop

- candidates = []

- total_tokens = 0

-

+ logger.warning(f"Parallel generation failed: {str(e)}")

+ # Fallback to sequential

for i in range(k):

try:

response = client.chat.completions.create(

@@ -233,61 +128,45 @@ def run(

)

candidates.append(response.choices[0].message.content)

total_tokens += response.usage.completion_tokens

- logger.debug(f"Generated candidate {i+1}/{k}")

-

- except Exception as fallback_error:

- logger.error(f"Error generating candidate {i+1}: {str(fallback_error)}")

+ except Exception as err:

+ logger.error(f"Error generating candidate {i+1}: {str(err)}")

continue

-

- if not candidates:

- logger.error("Failed to generate any candidates")

- return "Error: Could not generate any candidates", 0

-

- logger.info(f"Generated {len(candidates)} candidates using fallback method. Total tokens used: {total_tokens}")

- # Extract answers from each candidate

- answers = []

- answer_to_response = {} # Map normalized answers to full responses

+ if not candidates:

+ return "Error: Could not generate any candidates", 0

+

+ # Extract and normalize answers for voting

+ answer_votes = Counter()

+ answer_to_responses = {}

for i, candidate in enumerate(candidates):

- answer = extract_answer(candidate)

- if answer:

- normalized = normalize_answer(answer)

- answers.append(normalized)

- # Keep the first full response for each unique answer

- if normalized not in answer_to_response:

- answer_to_response[normalized] = candidate

- logger.debug(f"Candidate {i+1} answer: {answer} (normalized: {normalized})")

+ # Try to extract just the answer part

+ answer = extract_final_answer(candidate)

+

+ # Normalize for comparison

+ normalized = normalize_response(answer)

+

+ if normalized:

+ answer_votes[normalized] += 1

+

+ # Keep track of original responses for each normalized answer

+ if normalized not in answer_to_responses:

+ answer_to_responses[normalized] = []

+ answer_to_responses[normalized].append(candidate)

+

+ logger.debug(f"Candidate {i+1}: '{answer}' -> '{normalized}'")

else:

- logger.warning(f"Could not extract answer from candidate {i+1}")

-

- if not answers:

- logger.warning("No answers could be extracted from any candidate")

- # Return the first candidate as fallback

- return candidates[0] if candidates else "Error: No candidates generated", total_tokens

-

- # Count answer frequencies

- answer_counts = Counter(answers)

- logger.info(f"Answer distribution: {dict(answer_counts)}")

-

- # Get the most common answer

- most_common_answer, count = answer_counts.most_common(1)[0]

- confidence = count / len(answers)

-

- logger.info(f"Most common answer: '{most_common_answer}' with {count}/{len(answers)} votes ({confidence:.1%} confidence)")

+ logger.warning(f"Could not extract/normalize answer from candidate {i+1}")

- # Get the full response corresponding to the most common answer

- winning_response = answer_to_response.get(most_common_answer, candidates[0])

-

- # Log voting summary to console instead of adding to response

- logger.info("Majority Voting Summary:")

- logger.info(f" - Generated {len(candidates)} candidates")

- logger.info(f" - Most common answer: {most_common_answer}")

- logger.info(f" - Votes: {count}/{len(answers)} ({confidence:.1%} confidence)")

-

- if len(answer_counts) > 1:

- other_answers = [f"{ans} ({cnt} votes)" for ans, cnt in answer_counts.items() if ans != most_common_answer]

- logger.info(f" - Other answers: {', '.join(other_answers)}")

-

- # Return only the full response from the winning answer

- return winning_response, total_tokens

\ No newline at end of file

+ # Select the most voted answer

+ if answer_votes:

+ most_common_normalized, count = answer_votes.most_common(1)[0]

+ logger.info(f"Most common answer: '{most_common_normalized}' with {count}/{k} votes")

+

+ # Return the first original response that mapped to this answer

+ winning_responses = answer_to_responses[most_common_normalized]

+ return winning_responses[0], total_tokens

+ else:

+ # If no answers could be extracted, return the first candidate

+ logger.warning("No answers could be extracted, returning first candidate")

+ return candidates[0], total_tokens

\ No newline at end of file

diff --git a/pyproject.toml b/pyproject.toml

index 3ddb789b..5c163015 100644

--- a/pyproject.toml

+++ b/pyproject.toml

@@ -4,7 +4,7 @@ build-backend = "setuptools.build_meta"

[project]

name = "optillm"

-version = "0.1.22"

+version = "0.1.23"

description = "An optimizing inference proxy for LLMs."

readme = "README.md"

license = "Apache-2.0"

diff --git a/scripts/eval_optillmbench.py b/scripts/eval_optillmbench.py

index 58eac413..eb84a806 100644

--- a/scripts/eval_optillmbench.py

+++ b/scripts/eval_optillmbench.py

@@ -67,6 +67,15 @@

("majority_voting_9", "Majority Voting with k=9", {"k": 9}),

]

+# Default test-time compute configuration for standard evaluation

+# Using n=5 for all approaches to ensure fair comparison and memory efficiency

+DEFAULT_TEST_TIME_COMPUTE = [

+ ("avg@5", "Average of 5 parallel responses", {"n": 5}),

+ ("pass@5", "Pass@5 - success if any of 5 is correct", {"n": 5}),

+ ("maj@5", "Majority Voting with k=5", {"k": 5}),

+ ("genselect@5", "GenSelect with 5 candidates", {"num_candidates": 5})

+]

+

def load_optillm_bench() -> datasets.Dataset:

"""Load the OptiLLM Bench dataset."""

try:

@@ -318,67 +327,243 @@ def evaluate_model(

# Prepare the dataset

examples = dataset if max_samples is None else dataset.select(range(max_samples))

- # Create model name with approach - handle special cases

- if approach == "none":

+ # Check if we need to do multiple runs (for pass@1 calculation)

+ num_runs = approach_extra_body.get("num_runs", 1) if approach_extra_body else 1

+ # Check if we're using n parameter for parallel generation

+ n_param = approach_extra_body.get("n", 1) if approach_extra_body else 1

+

+ # Handle special approach names and create model names

+ if approach.startswith("avg@") or approach.startswith("pass@"):

+ # For avg@N and pass@N, use base model without any prefix

full_model_name = model

+ elif approach.startswith("maj@"):

+ # For majority voting, use the plugin prefix

+ full_model_name = f"majority_voting-{model}"

+ elif approach.startswith("genselect@"):

+ # For genselect, use the plugin prefix

+ full_model_name = f"genselect-{model}"

elif approach.startswith("thinkdeeper_"):

# For thinkdeeper, use base model name (decoding is passed in extra_body)

full_model_name = model

- elif approach.startswith("majority_voting_"):

- # For majority voting, use majority_voting prefix

+ elif approach.startswith("majority_voting"):

+ # For other majority voting configurations

full_model_name = f"majority_voting-{model}"

+ elif approach == "none":

+ # For explicit none approach

+ full_model_name = model

else:

# Standard approach prefix

full_model_name = f"{approach}-{model}"

for example in tqdm(examples, desc=f"Evaluating {approach}"):

- try:

- # Get appropriate prompt for the category

- prompt = get_prompt_for_category(example['question'], example['category'])

-

- # Record start time

- start_time = time.time()

-

- # Prepare extra_body parameters

- extra_body = {"spl_learning": False}

- if approach_extra_body:

- extra_body.update(approach_extra_body)

-

- # Make API call

- response = client.chat.completions.create(

- model=full_model_name,

- messages=[

- {"role": "system", "content": "You are a helpful AI assistant focused on providing precise answers in the requested format."},

- {"role": "user", "content": prompt}

- ],

- temperature=0.2,

- max_tokens=4096,

- extra_body=extra_body,

- )

-

- # Calculate time taken

- time_taken = time.time() - start_time

-

- # Get the response text

- response_text = response.choices[0].message.content

-

- # Also store the raw response for reference

- raw_response = response_text

+ # For avg@N and pass@N with n parameter, we generate n responses in parallel

+ if n_param > 1 and (approach.startswith("avg@") or approach.startswith("pass@")):

+ try:

+ # Get appropriate prompt for the category

+ prompt = get_prompt_for_category(example['question'], example['category'])

+

+ # Record start time

+ start_time = time.time()

+

+ # Prepare extra_body parameters (excluding n)

+ extra_body = {"spl_learning": False}

+ if approach_extra_body:

+ extra_body_clean = {k: v for k, v in approach_extra_body.items() if k not in ["n", "approach"]}

+ extra_body.update(extra_body_clean)

+

+ # Generate n responses - optillm handles n parameter properly

+ responses = []

+ try:

+ # Make API call with n parameter

+ response = client.chat.completions.create(

+ model=full_model_name,

+ messages=[

+ {"role": "system", "content": "You are a helpful AI assistant focused on providing precise answers in the requested format."},

+ {"role": "user", "content": prompt}

+ ],

+ n=n_param,

+ temperature=0.6, # Unified temperature for all approaches

+ max_tokens=4096,

+ extra_body=extra_body,

+ )

+

+ # Extract responses - optillm returns OpenAI-compatible format

+ responses = [(choice.message.content, time.time() - start_time) for choice in response.choices]

+ logger.debug(f"Generated {len(responses)} responses using n={n_param}")

+

+ except Exception as e:

+ # If n parameter fails, fall back to sequential generation

+ logger.warning(f"Parallel generation failed: {type(e).__name__}: {str(e)}")

+ logger.info("Falling back to sequential generation")

+ for i in range(n_param):

+ try:

+ single_start = time.time()

+ response = client.chat.completions.create(

+ model=full_model_name,

+ messages=[

+ {"role": "system", "content": "You are a helpful AI assistant focused on providing precise answers in the requested format."},

+ {"role": "user", "content": prompt}

+ ],

+ temperature=0.6,

+ max_tokens=4096,

+ extra_body=extra_body,

+ )

+ response_text = response.choices[0].message.content

+ responses.append((response_text, time.time() - single_start))

+ except Exception as seq_error:

+ logger.error(f"Sequential generation {i+1}/{n_param} failed: {seq_error}")

+ responses.append((None, 0)) # Add failed response

+

+ # Calculate total time

+ time_taken = time.time() - start_time

+

+ # Evaluate all responses

+ run_results = []

+ for response_text, _ in responses:

+ if response_text is not None:

+ processed_response = remove_thinking_blocks(response_text)

+ is_correct = evaluate_response(

+ processed_response,

+ example['answer'],

+ example['category'],

+ example['question']

+ )

+ run_results.append(is_correct)

+ else:

+ run_results.append(False) # Failed responses count as incorrect

+

+ # Calculate success rate based on approach

+ if approach.startswith("avg@"):

+ # Average success rate

+ success_rate = sum(run_results) / len(run_results) if run_results else 0

+ elif approach.startswith("pass@"):

+ # Pass@k: success if ANY response is correct

+ success_rate = 1.0 if any(run_results) else 0.0

+ else:

+ # Shouldn't reach here, but default to average

+ success_rate = sum(run_results) / len(run_results) if run_results else 0

+

+ # Update metrics with average

+ metrics["total_correct"] += success_rate

+ metrics["total_time"] += time_taken

+ metrics["samples"] += 1

+

+ # Update category metrics

+ if example['category'] not in category_metrics:

+ category_metrics[example['category']] = {

+ "correct": 0,

+ "total": 0,

+ "time": 0

+ }

+ category_metrics[example['category']]["correct"] += success_rate

+ category_metrics[example['category']]["total"] += 1

+ category_metrics[example['category']]["time"] += time_taken

+

+ # Record detailed result

+ detailed_results.append({

+ "id": example['id'],

+ "category": example['category'],

+ "correct": success_rate, # Store success rate

+ "n_param": n_param,

+ "successes": sum(run_results),

+ "time_taken": time_taken,

+ "ground_truth": example['answer']

+ })

+

+ except Exception as e:

+ logger.error(f"Error processing example {example['id']}: {e}")

+ # Count failed examples as incorrect

+ metrics["total_correct"] += 0

+ metrics["total_time"] += 0

+ metrics["samples"] += 1

+

+ # Update category metrics for failed example

+ if example['category'] not in category_metrics:

+ category_metrics[example['category']] = {

+ "correct": 0,

+ "total": 0,

+ "time": 0

+ }

+ category_metrics[example['category']]["correct"] += 0

+ category_metrics[example['category']]["total"] += 1

+ category_metrics[example['category']]["time"] += 0

+

+ # Record detailed result for failed example

+ detailed_results.append({

+ "id": example['id'],

+ "category": example['category'],

+ "correct": False,

+ "time_taken": 0,

+ "raw_response": f"ERROR: {str(e)}",

+ "processed_response": None,

+ "has_thinking": False,

+ "ground_truth": example['answer'],

+ "error": str(e)

+ })

+ continue

+

+ # For pass@1, we need to run multiple times and calculate average

+ elif num_runs > 1:

+ run_results = []

+ total_run_time = 0

- # Process the response to remove thinking blocks

- processed_response = remove_thinking_blocks(response_text)

+ for run_idx in range(num_runs):

+ try:

+ # Get appropriate prompt for the category

+ prompt = get_prompt_for_category(example['question'], example['category'])

+

+ # Record start time

+ start_time = time.time()

+

+ # Prepare extra_body parameters (excluding num_runs and approach)

+ extra_body = {"spl_learning": False}

+ if approach_extra_body:

+ extra_body_clean = {k: v for k, v in approach_extra_body.items() if k not in ["num_runs", "approach"]}

+ extra_body.update(extra_body_clean)

+

+ # Make API call

+ response = client.chat.completions.create(

+ model=full_model_name,

+ messages=[

+ {"role": "system", "content": "You are a helpful AI assistant focused on providing precise answers in the requested format."},

+ {"role": "user", "content": prompt}

+ ],

+ temperature=0.6, # Unified temperature for all approaches

+ max_tokens=4096,

+ extra_body=extra_body,

+ )

+

+ # Calculate time taken

+ time_taken = time.time() - start_time

+ total_run_time += time_taken

+

+ # Get the response text

+ response_text = response.choices[0].message.content

+

+ # Process the response to remove thinking blocks

+ processed_response = remove_thinking_blocks(response_text)

+

+ # Evaluate the processed response

+ is_correct = evaluate_response(

+ processed_response,

+ example['answer'],

+ example['category'],

+ example['question']

+ )

+

+ run_results.append(is_correct)

+

+ except Exception as e:

+ logger.error(f"Error in run {run_idx+1} for example {example['id']}: {e}")

+ run_results.append(False)

- # Evaluate the processed response

- is_correct = evaluate_response(

- processed_response,

- example['answer'],

- example['category'],

- example['question'] # Pass the question for MMLU evaluation

- )

+ # Calculate average success rate for this example

+ success_rate = sum(run_results) / len(run_results) if run_results else 0

+ avg_time = total_run_time / len(run_results) if run_results else 0

- # Update metrics

- metrics["total_correct"] += int(is_correct)

- metrics["total_time"] += time_taken

+ # Update metrics with average

+ metrics["total_correct"] += success_rate

+ metrics["total_time"] += avg_time

metrics["samples"] += 1

# Update category metrics

@@ -388,28 +573,130 @@ def evaluate_model(

"total": 0,

"time": 0

}

- category_metrics[example['category']]["correct"] += int(is_correct)

+ category_metrics[example['category']]["correct"] += success_rate

category_metrics[example['category']]["total"] += 1

- category_metrics[example['category']]["time"] += time_taken

-

- # Check if thinking blocks were removed

- has_thinking = '' in raw_response

+ category_metrics[example['category']]["time"] += avg_time

# Record detailed result

detailed_results.append({

"id": example['id'],

"category": example['category'],

- "correct": is_correct,

- "time_taken": time_taken,

- "raw_response": raw_response,

- "processed_response": processed_response if has_thinking else None,

- "has_thinking": has_thinking,

+ "correct": success_rate, # Store success rate instead of boolean

+ "num_runs": num_runs,

+ "successes": sum(run_results),

+ "time_taken": avg_time,

"ground_truth": example['answer']

})

- except Exception as e:

- logger.error(f"Error processing example {example['id']}: {e}")

- continue

+ else:

+ # Single run (original logic)

+ try:

+ # Get appropriate prompt for the category

+ prompt = get_prompt_for_category(example['question'], example['category'])

+

+ # Record start time

+ start_time = time.time()

+

+ # Prepare extra_body parameters (excluding approach)

+ extra_body = {"spl_learning": False}

+ if approach_extra_body:

+ extra_body_clean = {k: v for k, v in approach_extra_body.items() if k != "approach"}

+ extra_body.update(extra_body_clean)

+

+ # Make API call

+ response = client.chat.completions.create(

+ model=full_model_name,

+ messages=[

+ {"role": "system", "content": "You are a helpful AI assistant focused on providing precise answers in the requested format."},

+ {"role": "user", "content": prompt}

+ ],

+ temperature=0.6,

+ max_tokens=4096,

+ extra_body=extra_body,

+ )

+

+ # Calculate time taken

+ time_taken = time.time() - start_time

+

+ # Get the response text

+ response_text = response.choices[0].message.content

+

+ # Also store the raw response for reference

+ raw_response = response_text

+

+ # Process the response to remove thinking blocks

+ processed_response = remove_thinking_blocks(response_text)

+

+ # Evaluate the processed response

+ is_correct = evaluate_response(

+ processed_response,

+ example['answer'],

+ example['category'],

+ example['question'] # Pass the question for MMLU evaluation

+ )

+

+ # Update metrics

+ metrics["total_correct"] += int(is_correct)

+ metrics["total_time"] += time_taken

+ metrics["samples"] += 1

+

+ # Update category metrics

+ if example['category'] not in category_metrics:

+ category_metrics[example['category']] = {

+ "correct": 0,

+ "total": 0,

+ "time": 0

+ }

+ category_metrics[example['category']]["correct"] += int(is_correct)

+ category_metrics[example['category']]["total"] += 1

+ category_metrics[example['category']]["time"] += time_taken

+

+ # Check if thinking blocks were removed

+ has_thinking = '' in raw_response

+

+ # Record detailed result

+ detailed_results.append({

+ "id": example['id'],

+ "category": example['category'],

+ "correct": is_correct,

+ "time_taken": time_taken,

+ "raw_response": raw_response,

+ "processed_response": processed_response if has_thinking else None,

+ "has_thinking": has_thinking,

+ "ground_truth": example['answer']

+ })

+

+ except Exception as e:

+ logger.error(f"Error processing example {example['id']}: {e}")

+ # Count failed examples as incorrect

+ metrics["total_correct"] += 0 # Failed = incorrect

+ metrics["total_time"] += 0 # No time recorded for failed attempts

+ metrics["samples"] += 1

+

+ # Update category metrics for failed example

+ if example['category'] not in category_metrics:

+ category_metrics[example['category']] = {

+ "correct": 0,

+ "total": 0,

+ "time": 0

+ }

+ category_metrics[example['category']]["correct"] += 0 # Failed = incorrect

+ category_metrics[example['category']]["total"] += 1

+ category_metrics[example['category']]["time"] += 0

+

+ # Record detailed result for failed example

+ detailed_results.append({

+ "id": example['id'],

+ "category": example['category'],

+ "correct": False,

+ "time_taken": 0,

+ "raw_response": f"ERROR: {str(e)}",

+ "processed_response": None,

+ "has_thinking": False,

+ "ground_truth": example['answer'],

+ "error": str(e)

+ })

+ continue

# Calculate final metrics

final_metrics = {

@@ -419,6 +706,13 @@ def evaluate_model(

"total_samples": metrics["samples"],

}

+ # Log summary of failures if any

+ total_expected = len(examples)

+ failures = len([r for r in detailed_results if "error" in r])

+ if failures > 0:

+ logger.warning(f"Approach {approach}: {failures}/{total_expected} examples failed due to errors")

+ logger.warning(f"Failed examples are counted as incorrect in accuracy calculation")

+

# Add category-specific metrics

for category, cat_metrics in category_metrics.items():

final_metrics[f"{category}_accuracy"] = cat_metrics["correct"] / cat_metrics["total"]

@@ -458,12 +752,29 @@ def generate_report(all_metrics: Dict[str, Dict[str, float]], output_dir: str, i

"""Generate a comprehensive report comparing all approaches."""

report = []

+ # Check if this is the default test-time compute evaluation

+ is_default_test_time = set(all_metrics.keys()) == {"avg@5", "pass@5", "maj@5", "genselect@5"}

+

# Header

- report_title = "OptiLLM Bench Test-Time Compute Evaluation Report" if is_test_time_compute else "OptiLLM Bench Evaluation Report"

+ if is_default_test_time:

+ report_title = "OptiLLM Bench Test-Time Compute Evaluation Report"

+ elif is_test_time_compute:

+ report_title = "OptiLLM Bench Test-Time Compute Scaling Report"

+ else:

+ report_title = "OptiLLM Bench Evaluation Report"

+

report.append(f"# {report_title}")

report.append(f"Generated on: {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}\n")

- if is_test_time_compute:

+ if is_default_test_time:

+ report.append("## Test-Time Compute Evaluation Results\n")

+ report.append("This report evaluates the potential of test-time compute with:")

+ report.append("- **avg@5**: Average success rate of 5 parallel responses")

+ report.append("- **pass@5**: Success if ANY of 5 responses is correct")

+ report.append("- **maj@5**: Majority voting with 5 candidates")

+ report.append("- **genselect@5**: Quality-based selection from 5 candidates\n")

+ report.append("All approaches use n=5 parallel generation (with sequential fallback) for fair comparison.\n")

+ elif is_test_time_compute:

report.append("This report evaluates test-time compute scaling approaches:")

report.append("- **Sequential scaling**: ThinkDeeper with varying thinking token budgets")

report.append("- **Parallel scaling**: Majority voting with varying k values\n")

@@ -505,6 +816,39 @@ def generate_report(all_metrics: Dict[str, Dict[str, float]], output_dir: str, i

df = pd.DataFrame(rows, columns=headers)

report.append(df.to_markdown())

+ # Add summary section for default test-time compute

+ if is_default_test_time:

+ report.append("\n## Summary")

+ if all(metric in all_metrics for metric in ["avg@5", "pass@5", "maj@5", "genselect@5"]):

+ avg5_acc = all_metrics["avg@5"]["accuracy"] * 100

+ pass5_acc = all_metrics["pass@5"]["accuracy"] * 100

+ maj5_acc = all_metrics["maj@5"]["accuracy"] * 100

+ genselect5_acc = all_metrics["genselect@5"]["accuracy"] * 100

+

+ report.append(f"\n**Key Metrics:**")

+ report.append(f"- **avg@5** (average of 5 responses): {avg5_acc:.2f}%")

+ report.append(f"- **pass@5** (success if any correct): {pass5_acc:.2f}%")

+ report.append(f"- **maj@5** (majority voting): {maj5_acc:.2f}%")

+ report.append(f"- **genselect@5** (quality-based selection): {genselect5_acc:.2f}%")

+

+ # Calculate improvements over baseline (avg@5)

+ if avg5_acc > 0:

+ pass_improvement = ((pass5_acc - avg5_acc) / avg5_acc) * 100

+ maj_improvement = ((maj5_acc - avg5_acc) / avg5_acc) * 100

+ genselect_improvement = ((genselect5_acc - avg5_acc) / avg5_acc) * 100

+

+ report.append(f"\n**Improvements over avg@5 baseline:**")

+ report.append(f"- pass@5: {'+' if pass_improvement > 0 else ''}{pass_improvement:.1f}%")

+ report.append(f"- maj@5: {'+' if maj_improvement > 0 else ''}{maj_improvement:.1f}%")

+ report.append(f"- genselect@5: {'+' if genselect_improvement > 0 else ''}{genselect_improvement:.1f}%")

+

+ # Show variance indicator

+ if pass5_acc > avg5_acc:

+ variance_ratio = (pass5_acc - avg5_acc) / avg5_acc * 100

+ report.append(f"\n**Response Variance Indicator:**")

+ report.append(f"- Gap between pass@5 and avg@5: {variance_ratio:.1f}%")

+ report.append(f"- This indicates {'high' if variance_ratio > 50 else 'moderate' if variance_ratio > 20 else 'low'} variance in response quality")

+

# Save report

report_path = f"{output_dir}/evaluation_report.md"

with open(report_path, "w") as f:

@@ -513,7 +857,9 @@ def generate_report(all_metrics: Dict[str, Dict[str, float]], output_dir: str, i

logger.info(f"Report saved to {report_path}")

def main():

- parser = argparse.ArgumentParser(description="Evaluate a model on OptiLLM Bench")

+ parser = argparse.ArgumentParser(

+ description="Evaluate a model on OptiLLM Bench. By default, runs test-time compute evaluation with pass@1, maj@64, and genselect@64."

+ )

parser.add_argument("--model", required=True, help="Model identifier")

parser.add_argument("--base-url", default="http://localhost:8000/v1",

help="Base URL for API endpoint")

@@ -521,9 +867,9 @@ def main():

parser.add_argument("--output-dir", default="results",

help="Directory to save results")

parser.add_argument("--approaches", nargs="+",

- help="Specific approaches to evaluate (default: all)")

+ help="Specific approaches to evaluate (overrides default test-time compute)")

parser.add_argument("--test-time-compute", action="store_true",

- help="Evaluate test-time compute approaches (sequential and parallel scaling)")

+ help="Evaluate full test-time compute scaling approaches (ThinkDeeper and various k values)")

parser.add_argument("--debug", action="store_true", help="Enable debug logging")

args = parser.parse_args()

@@ -555,12 +901,26 @@ def main():

if args.approaches:

# Filter test-time compute approaches if specific ones are requested

approaches_config = [a for a in TEST_TIME_COMPUTE_APPROACHES if a[0] in args.approaches]

+ elif args.approaches:

+ # Specific approaches requested - check all available approach lists

+ all_available_approaches = APPROACHES + TEST_TIME_COMPUTE_APPROACHES + DEFAULT_TEST_TIME_COMPUTE

+ approaches_config = []

+ for requested_approach in args.approaches:

+ found = False

+ for approach_tuple in all_available_approaches:

+ if approach_tuple[0] == requested_approach:

+ if approach_tuple not in approaches_config: # Avoid duplicates

+ approaches_config.append(approach_tuple)

+ found = True

+ break

+ if not found:

+ logger.warning(f"Approach '{requested_approach}' not found in any configuration")

+ if not approaches_config:

+ raise ValueError(f"No valid approaches found. Requested: {args.approaches}")

else:

- # Use standard approaches

- if args.approaches:

- approaches_config = [a for a in APPROACHES if a[0] in args.approaches]

- else:

- approaches_config = APPROACHES

+ # Default: Use the default test-time compute configuration

+ approaches_config = DEFAULT_TEST_TIME_COMPUTE

+ logger.info("Using default test-time compute evaluation (avg@5, pass@5, maj@5, genselect@5)")

# Store all metrics for final report

all_metrics = {}

@@ -596,7 +956,9 @@ def main():

continue

# Generate final report

- generate_report(all_metrics, args.output_dir, args.test_time_compute)

+ # Determine if we're using default test-time compute or explicit test-time compute

+ is_test_time = args.test_time_compute or (not args.approaches and approaches_config == DEFAULT_TEST_TIME_COMPUTE)

+ generate_report(all_metrics, args.output_dir, is_test_time)

if __name__ == "__main__":

main()

\ No newline at end of file

diff --git a/test_results.json b/test_results.json

deleted file mode 100644

index 8ad21a82..00000000

--- a/test_results.json

+++ /dev/null

@@ -1,128 +0,0 @@

-[

- {

- "test_case": {

- "name": "Arena Bench Hard",

- "system_prompt": "",

- "query": "Write a Python program to build an RL model to recite text from any position that the user provides, using only numpy."

- },

- "results": [

- {

- "approach": "cepo",

- "result": [

- "Certainly! Below is a complete Python program that implements a reinforcement learning (RL) model to recite text from any position specified by the user, using only NumPy. The program includes the necessary classes and functions to create the environment, train the agent, and allow user interaction.\n\n```python\nimport numpy as np\n\nclass TextEnvironment:\n def __init__(self, text):\n self.text = text\n self.state = 0 # Starting position\n self.done = False\n\n def reset(self):\n self.state = 0\n self.done = False\n return self.state\n\n def step(self, action):\n if action == 0: # Recite next character\n if self.state < len(self.text) - 1:\n self.state += 1\n else:\n self.done = True\n elif action == 1: # Move backward\n if self.state > 0:\n self.state -= 1\n elif action == 2: # Stop\n self.done = True\n \n return self.state, self.done\n\nclass QLearningAgent:\n def __init__(self, state_size, action_size, learning_rate=0.1, discount_factor=0.9):\n self.q_table = np.zeros((state_size, action_size))\n self.learning_rate = learning_rate\n self.discount_factor = discount_factor\n\n def choose_action(self, state, epsilon):\n if np.random.rand() < epsilon: # Explore\n return np.random.randint(3) # Random action\n else: # Exploit\n return np.argmax(self.q_table[state]) # Best action\n\n def update_q_value(self, state, action, reward, next_state):\n best_next_action = np.argmax(self.q_table[next_state])\n td_target = reward + self.discount_factor * self.q_table[next_state][best_next_action]\n self.q_table[state][action] += self.learning_rate * (td_target - self.q_table[state][action])\n\ndef user_interface(text):\n print(\"Text: \", text)\n start_position = int(input(\"Enter the starting position (0 to {}): \".format(len(text) - 1)))\n return start_position\n\ndef train_agent(agent, env, episodes, epsilon):\n for episode in range(episodes):\n state = env.reset()\n total_reward = 0\n \n while not env.done:\n action = agent.choose_action(state, epsilon)\n next_state, done = env.step(action)\n reward = 1 if action == 0 else 0 # Reward for reciting the next character\n agent.update_q_value(state, action, reward, next_state)\n state = next_state\n total_reward += reward\n \n env.done = False # Reset done for the next episode\n print(f\"Episode {episode + 1}/{episodes}, Total Reward: {total_reward}\")\n\ndef test_agent(agent, env, start_position):\n env.state = start_position\n while not env.done:\n action = np.argmax(agent.q_table[env.state]) # Choose the best action\n next_state, done = env.step(action)\n if action == 0: # Recite next character\n print(env.text[env.state], end='', flush=True)\n env.state = next_state\n print() # New line after reciting\n\nif __name__ == \"__main__\":\n text = \"Hello, this is a reinforcement learning model to recite text.\"\n env = TextEnvironment(text)\n agent = QLearningAgent(state_size=len(text), action_size=3)\n\n # Train the agent\n train_agent(agent, env, episodes=1000, epsilon=0.1)\n\n # User interaction\n start_position = user_interface(text)\n print(\"Reciting from position {}: \".format(start_position), end='')\n test_agent(agent, env, start_position)\n```\n\n### Explanation of the Program\n\n1. **TextEnvironment Class**: This class manages the text and the current position of the agent. It provides methods to reset the environment and to take a step based on the action chosen by the agent.\n\n2. **QLearningAgent Class**: This class implements the Q-learning algorithm. It maintains a Q-table to store the expected rewards for each state-action pair and includes methods for choosing actions and updating Q-values based on the agent's experiences.\n\n3. **User Interface**: The `user_interface` function displays the text and prompts the user to enter a starting position from which they want the agent to recite the text.\n\n4. **Training Function**: The `train_agent` function trains the agent over a specified number of episodes, allowing it to learn the best actions to take in the environment.\n\n5. **Testing Function**: The `test_agent` function allows the agent to recite the text starting from the user-defined position based on the learned Q-values.\n\n6. **Main Execution Block**: The program initializes the text, environment, and agent, trains the agent, and then prompts the user for a starting position to recite the text.\n\n### How to Run the Program\n1. Copy the code into a Python environment (e.g., Jupyter Notebook, Python script).\n2. Run the program. It will train the agent and then ask for a starting position.\n3. Enter a valid starting position (between 0 and the length of the text minus one) to see the agent recite the text from that position.",

- 24709

- ],

- "time": 496.36777901649475,

- "status": "success"

- }

- ]

- },

- {

- "test_case": {

- "name": "Big Code Bench",

- "system_prompt": "",

- "query": "Calculates the average of the sums of absolute differences between each pair of consecutive numbers for all permutations of a given list. Each permutation is shuffled before calculating the differences. Args: - numbers (list): A list of numbers. Default is numbers from 1 to 10. The function should output with: float: The average of the sums of absolute differences for each shuffled permutation of the list. You should write self-contained code starting with: ``` import itertools from random import shuffle def task_func(numbers=list(range(1, 3))): ```"

- },

- "results": [

- {

- "approach": "cepo",

- "result": [

- "Certainly! Below is the self-contained code that calculates the average of the sums of absolute differences between each pair of consecutive numbers for all permutations of a given list. Each permutation is shuffled before calculating the differences, as per your request.\n\n```python\nimport itertools\nfrom random import shuffle\n\ndef task_func(numbers=list(range(1, 11))): # Default is numbers from 1 to 10\n all_permutations = list(itertools.permutations(numbers)) # Generate all permutations\n total_sum = 0 # Initialize total sum of absolute differences\n num_permutations = len(all_permutations) # Count of permutations\n\n for perm in all_permutations: # Iterate through each permutation\n shuffled_perm = list(perm) # Convert to list to shuffle\n shuffle(shuffled_perm) # Shuffle the current permutation\n \n # Calculate the sum of absolute differences for the shuffled permutation\n abs_diff_sum = sum(abs(shuffled_perm[i] - shuffled_perm[i + 1]) for i in range(len(shuffled_perm) - 1))\n total_sum += abs_diff_sum # Accumulate the sum\n\n # Calculate the average of the sums of absolute differences\n average_difference = total_sum / num_permutations if num_permutations > 0 else 0\n \n return float(average_difference) # Return the average as a float\n\n# Example usage\nresult = task_func()\nprint(result)\n```\n\n### Explanation of the Code:\n1. **Imports**: We import `itertools` for generating permutations and `shuffle` from `random` to shuffle the permutations.\n2. **Function Definition**: The function `task_func` takes a list of numbers, defaulting to numbers from 1 to 10.\n3. **Generate Permutations**: We generate all permutations of the input list.\n4. **Initialize Variables**: We initialize `total_sum` to accumulate the sums of absolute differences and `num_permutations` to count the total permutations.\n5. **Iterate Through Permutations**: For each permutation, we convert it to a list and shuffle it.\n6. **Calculate Absolute Differences**: We calculate the sum of absolute differences between consecutive elements in the shuffled permutation.\n7. **Accumulate the Total**: We add the calculated sum to `total_sum`.\n8. **Calculate Average**: After processing all permutations, we calculate the average of the sums of absolute differences.\n9. **Return Result**: Finally, we return the average as a float.\n\nYou can run this code in a Python environment to see the final result.",

- 14906

- ],

- "time": 318.84958386421204,

- "status": "success"

- }

- ]

- },

- {

- "test_case": {

- "name": "Maths Problem",

- "system_prompt": "You are a helpful AI assistant with expertise in mathematical and logical problem-solving.",

- "query": "Maximize x + y subject to: x + 2y <= 10, x >= 0, y >= 0"

- },

- "results": [

- {

- "approach": "cepo",

- "result": [

- "To maximize \\( z = x + y \\) subject to the constraints:\n\n1. \\( x + 2y \\leq 10 \\)\n2. \\( x \\geq 0 \\)\n3. \\( y \\geq 0 \\)\n\n### Step 1: Identify the Constraints\nThe constraints define a feasible region in the first quadrant of the Cartesian plane.\n\n### Step 2: Graph the Constraints\nTo graph the line defined by the constraint \\( x + 2y = 10 \\):\n- **Find the x-intercept**: Set \\( y = 0 \\):\n \\[\n x + 2(0) = 10 \\implies x = 10 \\quad \\text{(Point: (10, 0))}\n \\]\n- **Find the y-intercept**: Set \\( x = 0 \\):\n \\[\n 0 + 2y = 10 \\implies y = 5 \\quad \\text{(Point: (0, 5))}\n \\]\n\n### Step 3: Determine the Feasible Region\nThe feasible region is bounded by:\n- The line \\( x + 2y = 10 \\)\n- The x-axis (where \\( y = 0 \\))\n- The y-axis (where \\( x = 0 \\))\n\nThe feasible region is the area in the first quadrant below the line segment connecting (10, 0) and (0, 5).\n\n### Step 4: Identify the Corner Points\nThe corner points (vertices) of the feasible region are:\n1. \\( (0, 0) \\) (intersection of the axes)\n2. \\( (10, 0) \\) (x-intercept of the line)\n3. \\( (0, 5) \\) (y-intercept of the line)\n\n### Step 5: Evaluate the Objective Function at Each Corner Point\nNow we will evaluate \\( z = x + y \\) at each of the corner points:\n\n1. At \\( (0, 0) \\):\n \\[\n z = 0 + 0 = 0\n \\]\n\n2. At \\( (10, 0) \\):\n \\[\n z = 10 + 0 = 10\n \\]\n\n3. At \\( (0, 5) \\):\n \\[\n z = 0 + 5 = 5\n \\]\n\n### Step 6: Determine the Maximum Value\nNow we compare the values obtained:\n- At \\( (0, 0) \\): \\( z = 0 \\)\n- At \\( (10, 0) \\): \\( z = 10 \\)\n- At \\( (0, 5) \\): \\( z = 5 \\)\n\nThe maximum value of \\( z \\) is \\( 10 \\), which occurs at the point \\( (10, 0) \\).\n\n### Final Answer\nThe maximum value of \\( x + y \\) subject to the given constraints is **10**, which occurs at the point \\( (10, 0) \\).",

- 14387

- ],

- "time": 341.3320279121399,

- "status": "success"

- }

- ]

- },

- {

- "test_case": {

- "name": "GSM8K",

- "system_prompt": "",

- "query": "If there are 3 cars in the parking lot and 2 more cars arrive, how many cars are in the parking lot?"

- },

- "results": [

- {

- "approach": "cepo",

- "result": [

- "If there are 3 cars in the parking lot and 2 more cars arrive, the total number of cars in the parking lot is:\n\n\\[\n3 + 2 = 5\n\\]\n\nTherefore, there are **5 cars** in the parking lot.",

- 5727

- ],

- "time": 139.8697202205658,

- "status": "success"

- }

- ]

- },

- {

- "test_case": {

- "name": "r/LocalLLaMA",

- "system_prompt": "",

- "query": "I have a dish of potatoes. The following statements are true: No potatoes of mine, that are new, have >been boiled. All my potatoes in this dish are fit to eat. No unboiled potatoes of mine are fit to eat. Are there any new potatoes in this dish?"

- },

- "results": [

- {

- "approach": "cepo",

- "result": [

- "Based on the analysis of the statements provided:\n\n1. **No potatoes of mine that are new have been boiled.** (This means new potatoes cannot be boiled.)\n2. **All my potatoes in this dish are fit to eat.** (This indicates that every potato present is edible.)\n3. **No unboiled potatoes of mine are fit to eat.** (This implies that if a potato is unboiled, it cannot be fit to eat.)\n\nFrom these statements, we can conclude the following:\n\n- Since all potatoes in the dish are fit to eat, there cannot be any unboiled potatoes in the dish (as unboiled potatoes are not fit to eat).\n- If there were new potatoes in the dish, they would be unboiled (because new potatoes have not been boiled), which would contradict the fact that all potatoes in the dish are fit to eat.\n\nTherefore, we conclude that there **are no new potatoes in this dish.**",

- 9633

- ],

- "time": 229.47916913032532,

- "status": "success"

- }

- ]

- },

- {

- "test_case": {

- "name": "reddit",

- "system_prompt": "",

- "query": "There are 24 volunteers. Over the next 3 weeks, each volunteer is assigned to a different task. There are 8 tasks. Each week, the volunteers switch tasks. Each task has 3 volunteers assigned to it. Volunteers cannot be assigned to the same task more than once, and volunteers cannot share the same task more than once."

- },

- "results": [

- {

- "approach": "cepo",

- "result": [

- "Based on the constraints provided, here is the final assignment schedule for the 24 volunteers over the next 3 weeks, ensuring that each volunteer is assigned to a different task each week, with no repetitions of tasks or sharing of tasks among the same volunteers.\n\n### Final Assignment Schedule\n\n#### Week 1\n| Task | Volunteers |\n|------|------------------|\n| T1 | V1, V2, V3 |\n| T2 | V4, V5, V6 |\n| T3 | V7, V8, V9 |\n| T4 | V10, V11, V12 |\n| T5 | V13, V14, V15 |\n| T6 | V16, V17, V18 |\n| T7 | V19, V20, V21 |\n| T8 | V22, V23, V24 |\n\n#### Week 2\n| Task | Volunteers |\n|------|------------------|\n| T1 | V1, V4, V7 |\n| T2 | V2, V5, V8 |\n| T3 | V3, V6, V9 |\n| T4 | V10, V13, V16 |\n| T5 | V11, V14, V17 |\n| T6 | V12, V15, V18 |\n| T7 | V19, V22, V24 |\n| T8 | V20, V21, V23 |\n\n#### Week 3\n| Task | Volunteers |\n|------|------------------|\n| T1 | V4, V7, V10 |\n| T2 | V5, V8, V11 |\n| T3 | V6, V9, V12 |\n| T4 | V13, V16, V19 |\n| T5 | V14, V17, V20 |\n| T6 | V15, V18, V21 |\n| T7 | V22, V24, V1 |\n| T8 | V23, V2, V3 |\n\n### Summary of Constraints Met\n1. **Unique Task Assignment**: Each volunteer is assigned to a different task each week.\n2. **No Repeated Tasks**: No volunteer is assigned to the same task more than once over the 3 weeks.\n3. **No Shared Tasks**: No group of volunteers shares the same task more than once.\n\nThis schedule effectively meets all the requirements outlined in the question.",

- 23518

- ],

- "time": 547.4458949565887,

- "status": "success"

- }

- ]

- },

- {

- "test_case": {

- "name": "GH",

- "system_prompt": "",

- "query": "Find the largest possible real part of[(75+117i)z+\frac{96+144i}{z}]where z is a complex number with |z|=4"

- },

- "results": [

- {

- "approach": "cepo",

- "result": [

- "To find the largest possible real part of the expression \n\n\\[\n(75 + 117i)z + \\frac{96 + 144i}{z}\n\\]\n\nwhere \\( z \\) is a complex number with \\( |z| = 4 \\), we can follow the steps outlined previously.\n\n### Step 1: Express \\( z \\) in polar form\nSince \\( |z| = 4 \\), we can express \\( z \\) as:\n\\[\nz = 4e^{i\\theta}\n\\]\nwhere \\( \\theta \\) is the argument of \\( z \\).\n\n### Step 2: Substitute \\( z \\) into the expression\nSubstituting \\( z \\) into the expression gives:\n\\[\n(75 + 117i)(4e^{i\\theta}) + \\frac{96 + 144i}{4e^{i\\theta}}.\n\\]\nThis simplifies to:\n\\[\n4(75 + 117i)e^{i\\theta} + \\frac{96 + 144i}{4} e^{-i\\theta}.\n\\]\nCalculating \\(\\frac{96 + 144i}{4}\\):\n\\[\n\\frac{96 + 144i}{4} = 24 + 36i.\n\\]\nThus, the expression becomes:\n\\[\n4(75 + 117i)e^{i\\theta} + (24 + 36i)e^{-i\\theta}.\n\\]\n\n### Step 3: Expand the expression\nNow we can express this as:\n\\[\n= 4(75\\cos \\theta + 117i\\cos \\theta + 75i\\sin \\theta - 117\\sin \\theta) + (24\\cos \\theta + 36i\\cos \\theta - 24i\\sin \\theta - 36\\sin \\theta).\n\\]\nCombining the real and imaginary parts:\n- Real part: \n\\[\n4(75\\cos \\theta - 117\\sin \\theta) + (24\\cos \\theta - 36\\sin \\theta)\n\\]\n- Imaginary part: \n\\[\n4(117\\cos \\theta + 75\\sin \\theta) + 36\\cos \\theta - 24\\sin \\theta\n\\]\n\nThus, the real part \\( R \\) is:\n\\[\nR = 4(75\\cos \\theta - 117\\sin \\theta) + (24\\cos \\theta - 36\\sin \\theta)\n\\]\n\\[\n= (300 + 24)\\cos \\theta + (-468 - 36)\\sin \\theta\n\\]\n\\[\n= 324\\cos \\theta - 504\\sin \\theta.\n\\]\n\n### Step 4: Maximize the real part\nTo maximize \\( R(\\theta) = 324\\cos \\theta - 504\\sin \\theta \\), we can rewrite it in the form:\n\\[\nR(\\theta) = A\\cos(\\theta + \\phi)\n\\]\nwhere:\n- \\( A = \\sqrt{(324)^2 + (-504)^2} \\)\n- \\( \\tan \\phi = \\frac{-504}{324} \\)\n\nCalculating \\( A \\):\n\\[\nA = \\sqrt{324^2 + 504^2} = \\sqrt{104976 + 254016} = \\sqrt{358992} = 598.32 \\text{ (approximately)}.\n\\]\n\n### Step 5: Find the maximum value\nThe maximum value of \\( R(\\theta) \\) occurs when \\( \\cos(\\theta + \\phi) = 1 \\):\n\\[\n\\text{Maximum } R = A = 598.32.\n\\]\n\n### Conclusion\nThus, the largest possible real part of the expression \\((75 + 117i)z + \\frac{96 + 144i}{z}\\) where \\(|z| = 4\\) is approximately:\n\n\\[\n\\boxed{598.32}.\n\\] \n\nThis is the correct answer to the question.",

- 19637

- ],

- "time": 407.97162795066833,

- "status": "success"

- }

- ]

- }

-]

\ No newline at end of file

diff --git a/tests/README.md b/tests/README.md

new file mode 100644

index 00000000..ecde79cb

--- /dev/null

+++ b/tests/README.md

@@ -0,0 +1,107 @@

+# OptILLM Tests

+

+This directory contains tests for the OptILLM project.

+

+## Structure

+

+- `test.py` - Main comprehensive test suite for all OptILLM approaches

+- `test_cases.json` - Test cases for the main test suite

+- `test_plugins.py` - Unit tests for plugin functionality

+- `test_api_compatibility.py` - Tests for OpenAI API compatibility

+- `test_n_parameter.py` - Tests for n parameter functionality (multiple completions)

+- `test_approaches.py` - Unit tests for approach modules (no model inference required)

+- `test_ci_quick.py` - Quick CI tests for imports and basic functionality

+- `run_tests.sh` - Convenience script to run all tests

+- `requirements.txt` - Test dependencies (pytest, etc.)

+

+## Running Tests

+

+### Prerequisites

+

+1. Install test dependencies:

+ ```bash

+ pip install -r tests/requirements.txt

+ ```

+

+2. Start the OptILLM server:

+ ```bash

+ python optillm.py

+ ```

+

+### Run All Tests

+